Module 7 Common statistical problems

Many statistical methods assume that data follow certain distributions, and we have already explored methods for making sure your data follow those distributions. Other problems are not so easy to diagnose or fix. This section will introduce you to some methods for dealing with more subtle data problems. Note that none of these issues have one-size-fits-all solutions. Dealing with these issues will require understanding your study system and thinking through the consequences of potential solutions.

7.1 Outliers and erroneous values

Outliers are values that much larger or smaller than other values in the data set. While these values are theoretically possible in any distribution, they are by their nature extremely unlikely. Outliers can cause problems with many statistical tests because they are often given undue weight. This is because deviations from the mean are often squared.

There are two problems with outliers: how to detect them, and what to do with them.

7.1.1 Detecting outliers

There are several definitions of outlier. Some researchers have suggested simple measures such as Z-scores to detect outliers. Z-scores measure how many standard deviations (SD) an observation is away from the mean. The Z-score of an observation \(x_i\) (observation i of variable x) is calculated as:

\[Z\left(x_i\right)=\frac{x_i-\bar{x}}{\sigma\left(x\right)}\]

where \(\bar{x}\) is the mean of x and \(\sigma\left(x\right)\) is the SD of x. For example, Z-scores of -2.3 and +4.1 indicate observations 2.3 SD below and 4.1 SD above the mean, respectively. Z-scores are also referred to as sigmas, referring to the symbol for SD. E.g., a 4\(\sigma\) observation is 4 SD away from the mean. Various \(\sigma\) levels have been used to detect outliers, in which researchers discard any observation 2\(\sigma\), 3\(\sigma\), etc., away from the mean. This threshold varies by discipline, so follow the conventions in your field.

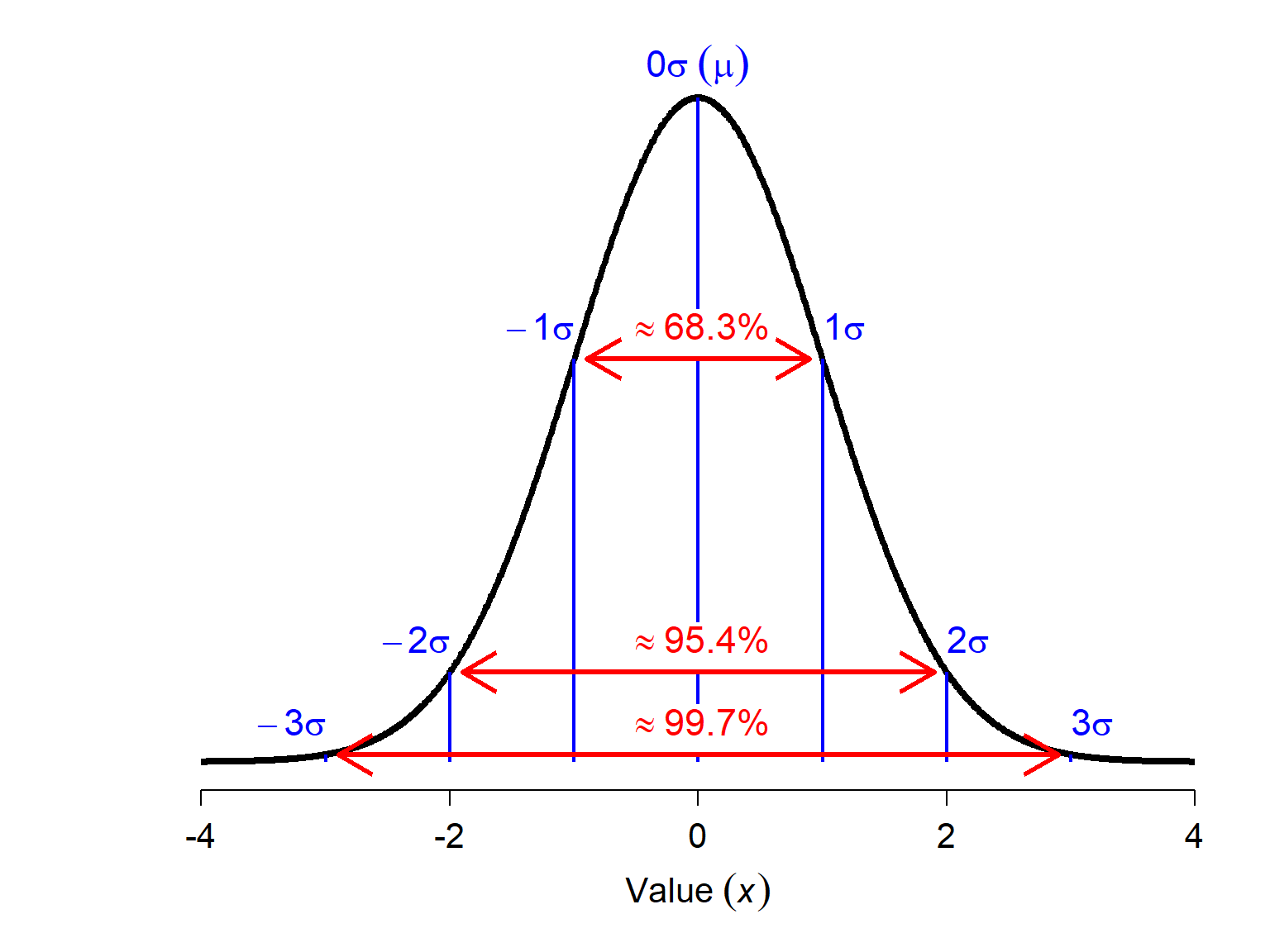

The figure below shows Z-scores relative to the standard normal distribution (\(\mu\) = 0, SD = 1). About 68% of observations are expected to have a Z-score between -1 and 1–i.e., deviate by \(\le\) 1 \(\sigma\). About 95% of observations should be \(\le\) 2 \(\sigma\), and over 99% of observations should be \(\le\) 3 \(\sigma\). The greater the magnitude of a value’s Z-score, the less likely it is. Values with > 3 or 4 \(\sigma\) should almost never occur. Thus, observations with large-magnitude Z-scores may turn out to be outliers.

It must be noted that a large magnitude Z-score does not prove that an observation is an outlier. Like p-values, Z-scores are just a heuristic for making decisions. Don’t just delete any observation \(|Z|\ge\) 3; examine those observations to see if they are legitmate and don’t take any action without a good biological reason.

7.1.2 Dealing with outliers

The easiest way to deal with outliers is simply to delete them. This can be fine if you have a lot of data–many 1000s or even millions of records. I’ve worked with several statisticians who routinely delete observations with response variables in the top and bottom 5 percentiles. This may not always be an appropriate strategy in biology. For one thing, outliers can occur for completely legitimate reasons and deleting them would be discarding important information about the study system.

Never delete an observation based solely on its magnitude.

Data should only be deleted when there is a biological or experimental reason. These might include experimental errors, inappropriate sampling, extraordinary field conditions, etc. Furthermore, you should always document when observations were deleted and why, and disclose that information in your write up and to reviewers. The possibility of including supplemental information or documents with manuscripts leaves little excuse not to be transparent about what observations you deleted and why.

Another way to deal with outliers is to transform the data. Variance-stabilizing transformations, such as the log-transform, often have the side-effect of reducing outliers in the data.

The third method for dealing with outliers is censoring. Censoring data in statistics means analyzing data as either the measured value or the interval in which the value falls. For example, if a mouse is weighed with a scale that goes up to 60 g, and maxes out the scale, we record the mouse as weighing \(\ge\) 60 g. Statistical methods for censored data have been developed but are outside the scope of this course. One popular form of censoring is called Winsorizing, where values below the 5th percentile are set to the 5th percentile and values above the 95th percentile are set to the 95th percentile. Censoring is common practice in fields involving certain types of instrumentation. For example, many spectrophotometers (e.g., microplate readers) record optical density (OD) as the amount of light passing through a specimen as a measurement of concentration. Most machines have a lower limit to the OD that they can detect. Observations below this minimum OD might be censored to 0, or to some pre-determined fraction of the minimum detectable OD.

Similar to censoring, outlying data can be removed by truncation. Truncation in statistics means ignoring values outside of a certain range. As with deletion, you must be very explicit about what criteria were used to truncate the data.

Weighting is a strategy where observations can be given greater or lesser weight to adjust how much influence they have. This strategy can become very complicated and involve a lot of arbitrary decisions. So, a simpler strategy such as truncation or transformation might be more appropriate.

Finally, researchers can use analysis strategies that are less sensitive to outliers. For example, robust statistics use absolute deviations rather than squared deviations. This gives outliers much less influence than under conventional methods based on sums of squared errors. Nonparametric methods, particularly those based on rank order, can also be less sensitive to outliers.

Whatever strategy you choose to deal with your outliers, you need to be aware of how much your decision affected the outcome. It is a good idea to analyze your data both with and without suspected outliers to measure how much influence they really have. If the analysis returns the same results with and without the outliers, leave them in.

7.2 Autocorrelation

Autocorrelation describes a phenomenon where set of values is correlated with itself, with the strength of that correlation determined by the distance between observations. In biology, the “distance” that defines autocorrelation is often spatial, temporal, or phylogenetic.

| Autocorrelation type | Distance measure | Explanation |

|---|---|---|

| Spatial | Space (e.g., km) | Samples from sites that are close together in space are more similar to each other than they are to sites that are farther away, just because they are closer to each other. |

| Temporal | Time (e.g., days) | Observations taken closer together in time are more similar to each other than they are to observations separated by more time. |

| Phylogenetic | Relatedness | Taxa that share a more recent common ancestor (i.e., are more closely related) are more similar to each other than they are to taxa that are less closely related to each other. |

The main consequence of autocorrelation is similar to the problems associated with pseudoreplication: because observations are not independent, the statistically relevant number of observations (degrees of freedom) is smaller than the nominal number of observations. This means that the power of the test is inflated. A related idea is that the residuals of a statistical model can be autocorrelated. In that case, the model violates the assumption of independent residuals shared by most statistical methods. Autocorrelation can be controlled for if you have some idea of its source and nature.

7.2.1 Graphical methods to identify autocorrelation

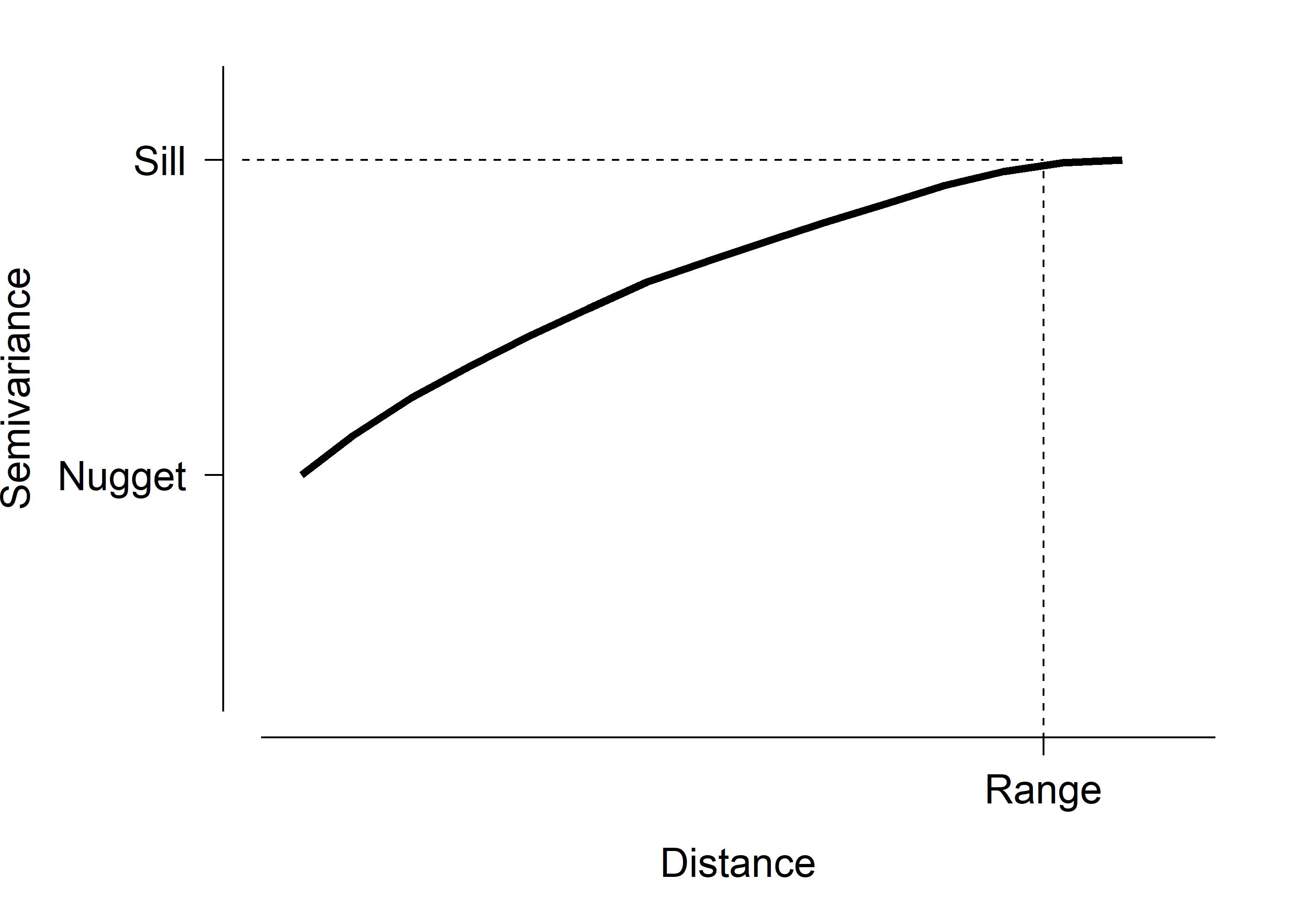

One way to explore potential autocorrelation is to construct a variogram. A variogram plots the squared differences between samples against their distance (temporal, spatial, etc.). A semivariogram uses half of that variance. An example semivariogram is below.

This figure shows some important quantities on the semivariogram:

- Nugget: The variance or semivariance at 0 distance. I.e., the y-intercept. Variation at zero distance represents other sources of error, such as sampling error, demographic stochasticity, etc.

- Sill: The variance or semivariance at which the curve gets close to its maximum or asymptotic value.

- Range: The distance at which the variance or semivariance reaches the sill.

Semivariograms should always increase, at least initially, because closer observations should be similar to each other simply as a matter of proximity. Samples separated by distances less than the range are autocorrelated. Semivariograms sometimes reach a peak and then start decreasing, or varying up and down. The initial peak is usually interpreted as the sill, and the distance when the curve reaches the sill is considered the range. The importance and interpreation of the semivariogram is something that you, as a biologist, have to figure out.

7.2.2 Mantel tests for autocorrelation

Spatial autocorrelation is a particular problem for ecologists and environmental biologists. The Mantel test can be used to measure spatial autocorrelation as the correlation between distance in space and distance in terms of the measured variables. The Mantel test can be thought of as a multivariate generalization of linear correlation that measures correlation between two matrices. Whereas linear correlation measures how variation in one variable is related to variation in another variable, the Mantel test measures how variation in one matrix (i.e., set of variables) is related to variation in another matrix.

The application to spatial autocorrelation is obvious. The spatial distance matrix can be calculated from coordinates such as latitude or longitude63, and compared to the biological distance matrix. When other types of autocorrelation are of interest, you can calculate a distance matrix based on time or on phylogenetic relatedness, or whatever else might be driving the autocorrelation.

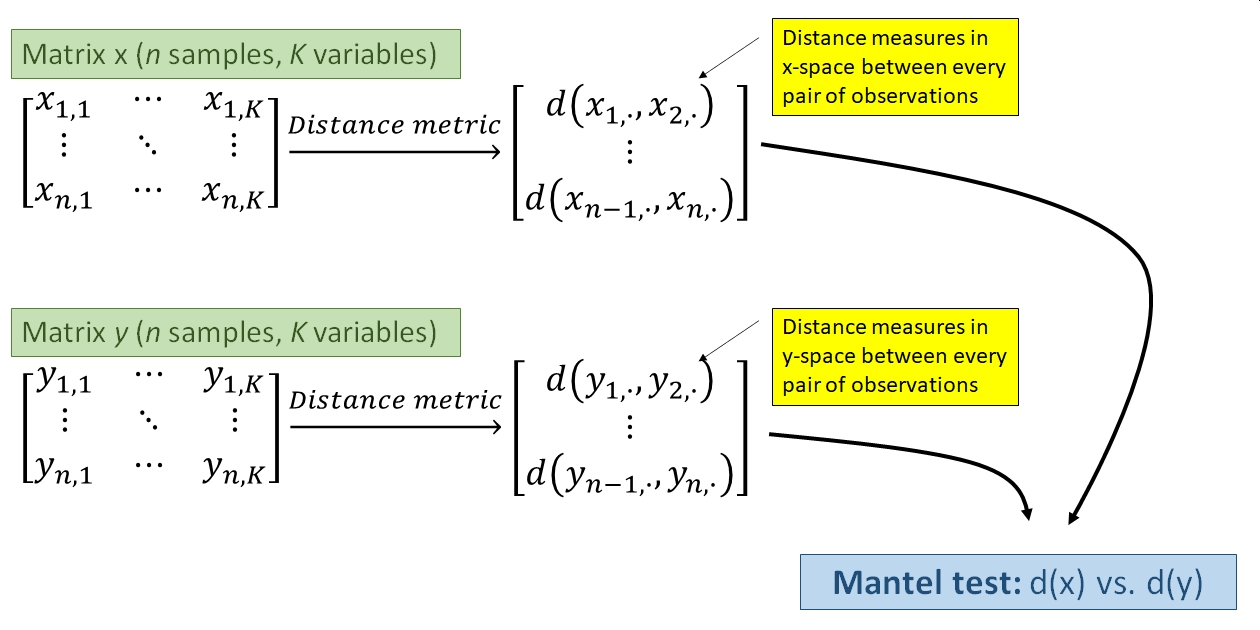

The Mantel test works by first calculating two distance matrices, which define the “distances” or “dissimilarities” between each sample. One distance matrix relates to spatial, temporal, or phylogenetic distance. The other relates to dissimilarity in terms of the measured variables. The dissimilarity metric incorporates information about how much any pair of observations differ in terms of every variable represented in the matrix. The Mantel correlation coefficient r is the linear correlation coefficient (like Pearson’s r) between the two distance matrices. It answers the question, “How is variation in this set of variables related to distance in space (or time, or phylogeny, etc.)?”.

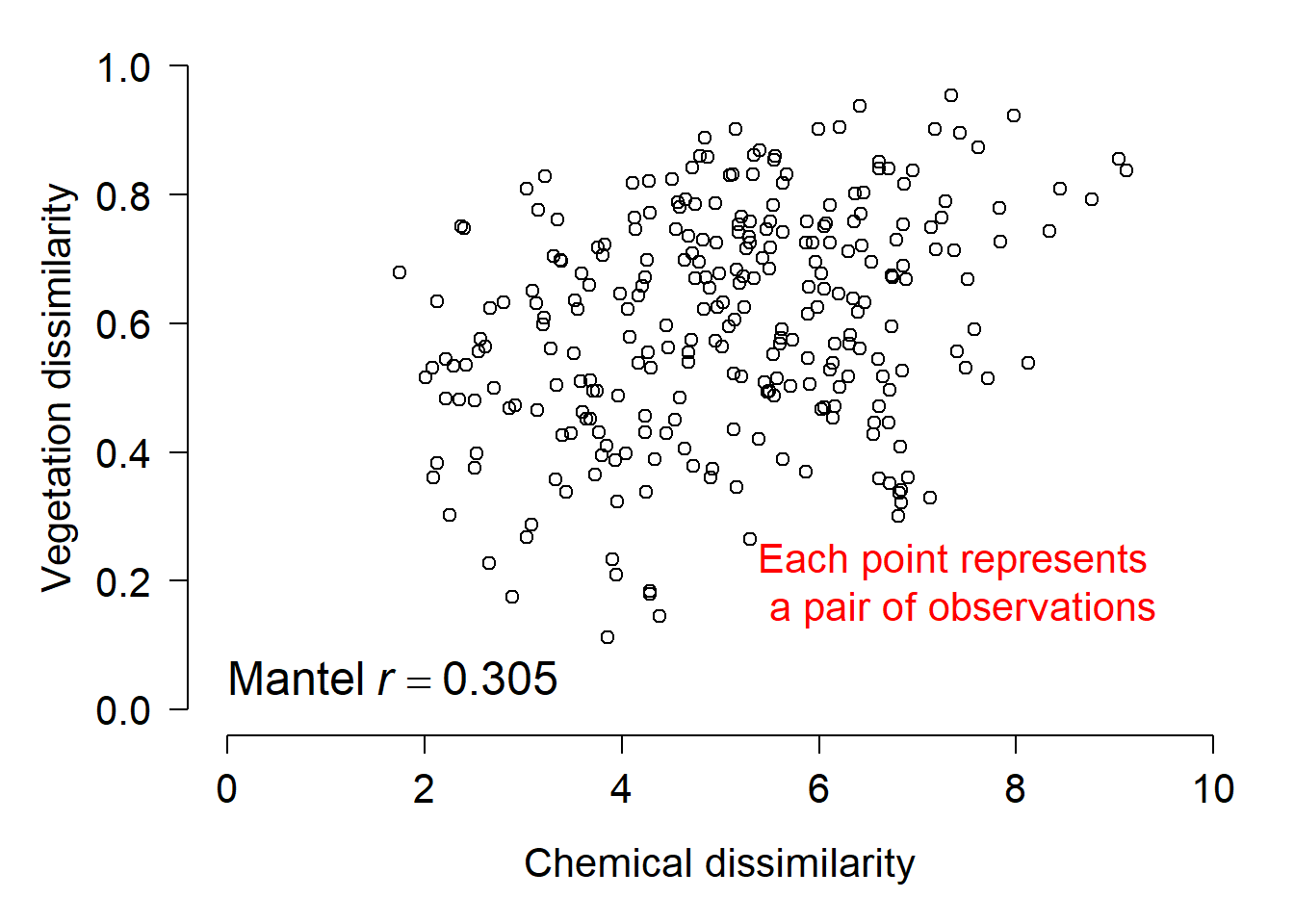

The example below illustrates another use for the Mantel test: measuring association between two sets of variables taken from the same sample units. In this example, dissimilarity in terms of species composition is related to dissimilarity in soil chemical characteristics.

library(vegan)

## Warning: package 'vegan' was built under R version 4.3.3

## Loading required package: permute

## Warning: package 'permute' was built under R version 4.3.3

## Loading required package: lattice

## This is vegan 2.6-6.1

# biological data

data(varespec)

# soil chemistry data

data(varechem)

x <- varechem

y <- varespec

dy <- vegdist(varespec) # Bray-Curtis metric

dx <- vegdist(scale(varechem), "euclid") # Euclidean metric

mantel(dx, dy)

##

## Mantel statistic based on Pearson's product-moment correlation

##

## Call:

## mantel(xdis = dx, ydis = dy)

##

## Mantel statistic r: 0.3047

## Significance: 0.002

##

## Upper quantiles of permutations (null model):

## 90% 95% 97.5% 99%

## 0.126 0.153 0.176 0.213

## Permutation: free

## Number of permutations: 999

par(mfrow=c(1,1), mar=c(5.1, 5.1, 1.1, 1.1),

bty="n", lend=1, las=1, cex.axis=1.3, cex.lab=1.3)

plot(dx, dy, xlab="Chemical dissimilarity",

ylab="Vegetation dissimilarity",

ylim=c(0,1), xlim=c(0, 10))

text(0, 0.05,

expression(Mantel~italic(r)==0.305),

adj=0, cex=1.5)

text(5.38, 0.2,

"Each point represents \n a pair of observations",

cex=1.3, adj=0, col="red")

The figure above shows the result visually. The Mantel r statistic describes the sign and magnitude of the correlation between dissimilarity in terms of chemistry (x-axis) and dissimilarity in terms of the plant community (y-axis). It is interpreted just like Pearson’s r. In the plot it is important to realize that each point represents a pair of observations, plotted by the dissimilarities between them. The correct interpretation of this plot is that samples with similar soil chemistry tend to also have similar plant communities. Alternatively, you could say that the larger the difference in soil chemistry, the larger the difference in plant communities (or something like that).

7.2.3 Dealing with autocorrelation

Autocorrelation may or may not be a problem in your dataset. As pointed out by Kuehn and Dormann (2012), there is a difference between autocorrelation in a response variable and autocorrelation in the residuals of a statistical model. Autocorrelation in a response variable can be managed by inclusion of appropriate predictor variables (which may themselves be autocorrelated or correlated; see next section). This works because if autocorrelated values can be predicted by a predictor variable, then the autocorrelation is controlled for, by definition.

Autocorrelation in residuals, however, represents a pattern in the data that is not accounted for. There are methods for accounting for autocorrelated residuals such as conditional autoregressive models (CAR) (Gelfand and Vounatsou 2003), generalized or ordinary least squares models (GLS or OLS), classification and regression trees or CART (McCune et al. 2002), and mixed models (Bolker et al. 2009). None of these solutions is appropriate for every situation.

7.3 Collinearity

Sometimes we face the problem of having predictor variables that are not only related to the response variable, but also related to each other. This phenomenon is called collinearity. In large datasets the problem becomes multicollinearity, when many variables are related to each other. Including collinear predictor variables in an analysis can severely bias the parameter estimates of that analysis (e.g., regression coefficients) and as a result make the results misleading or not generalizable. The effects of collinear predictor variables are by definition confounded with each other, meaning that the effects cannot be separated (Dormann et al. 2013).

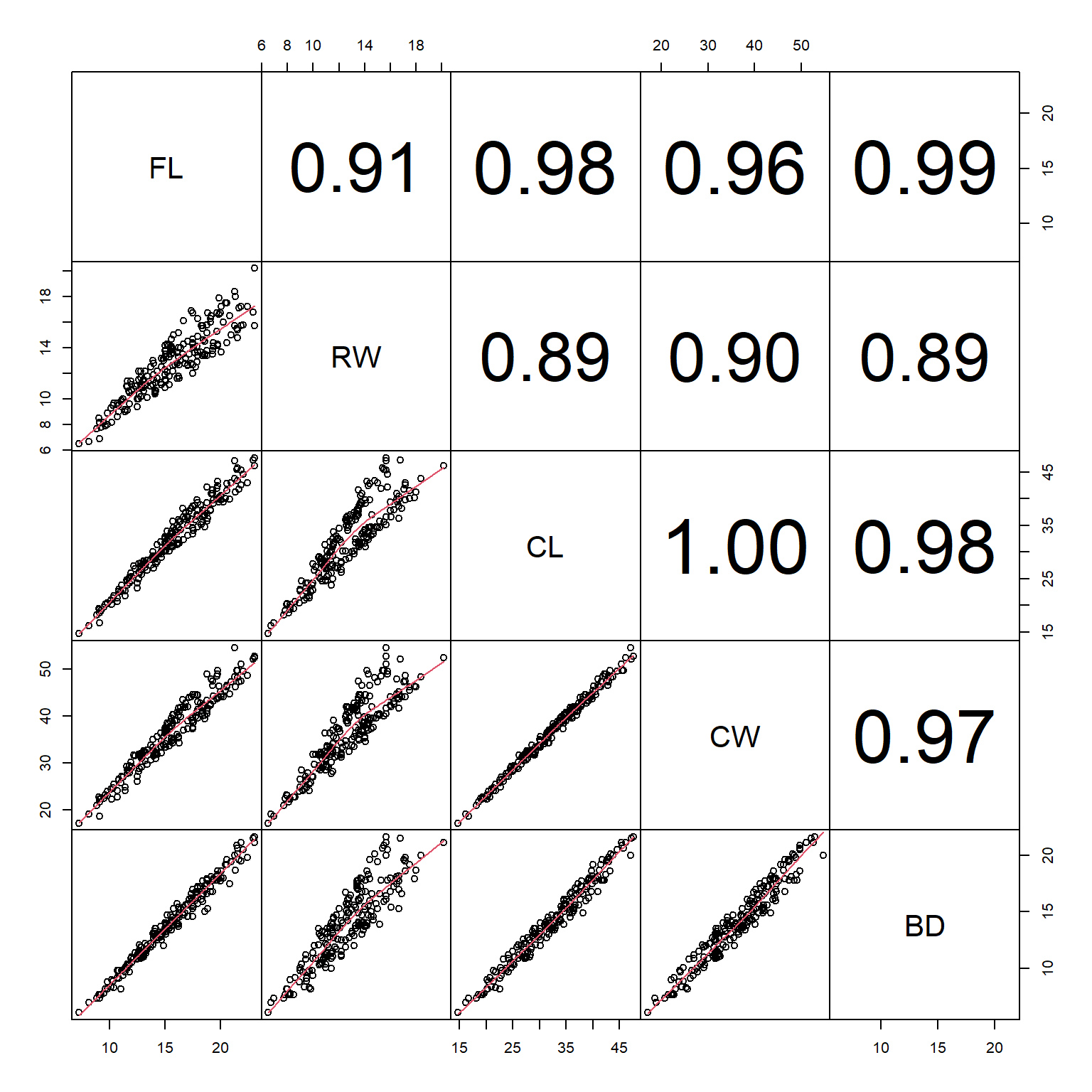

The most straightforward way of detecting collinearity is with scatterplot matrices and correlation coefficients (see here). This is a technique to visualize how many variables in a dataset relate to each other simultaneously. As shown in the linked section, replacing the upper triangle of boxes with the correlation coefficients can help detect potentially problematic pairs of variables. The figure below shows an example from the crabs dataset in package MASS. In this dataset, all of the variables are highly correlated with each other.

If you have many variables, then a figure like the one above can be hard to read or interpret. One solution might be to create a table of correlation coefficients between every pair of variables. The base function cor() can do this, but a better-formatted table takes a little more work.

## FL RW CL CW BD

## FL 1.0000000 0.9069876 0.9788418 0.9649558 0.9876272

## RW 0.9069876 1.0000000 0.8927430 0.9004021 0.8892054

## CL 0.9788418 0.8927430 1.0000000 0.9950225 0.9832038

## CW 0.9649558 0.9004021 0.9950225 1.0000000 0.9678117

## BD 0.9876272 0.8892054 0.9832038 0.9678117 1.0000000# better table:

vars <- names(x)[dat.cols]

# generate every unique pair of two variables by name:

cx <- data.frame(t(combn(vars, 2)))

# calculate correlation coefficients for each pair:

cx$r <- NA

for(i in 1:nrow(cx)){

cx$r[i] <- cor(x[,cx$X1[i]], x[,cx$X2[i]])

}

cx## X1 X2 r

## 1 FL RW 0.9069876

## 2 FL CL 0.9788418

## 3 FL CW 0.9649558

## 4 FL BD 0.9876272

## 5 RW CL 0.8927430

## 6 RW CW 0.9004021

## 7 RW BD 0.8892054

## 8 CL CW 0.9950225

## 9 CL BD 0.9832038

## 10 CW BD 0.9678117The resulting table can then be sorted or filtered by r to identify pairs of variables that are correlated with each other.

Dormann et al. (2013) suggest to use a cutoff of \(|r|\) in between 0.5 and 0.7 to identify collinear pairs of variables. When a pair of variables are identified as collinear, the easiest solution is to simply run the analysis without one of them. This is because the variables are redundant, and any conclusions made using one would also be obtained using the other.

7.4 Missing data

The last target of exploratory data analysis that we will explore is missing data. Data can be missing for many reasons. Equipment failures, human error, natural disasters, and other causes can cause values in your dataset to go missing or otherwise be invalid. How to handle the missing data is up to you as a biologist: there is no one-size-fits-all statistical solution. The two basic ways to deal with missing data are to (1) not deal with it and (2) estimate the missing values.

7.4.1 Option 1: Ignore missing data

The easiest solution is simply to ignore missing data. Consider this dataset, which contains morphological data on 63 species of bats (Hutcheon et al. 2002). This dataset is modified from an example in the unit on nonlinear models. Notice that in this version the brain masses (brw) are missing for 3 species of bats.

Because there are a lot of data, one option for analyzing this dataset would be to simply ignore the missing observations. For example, if someone was interested in the relationship between body size (variable bow) and brain mass, they could just fit a model and R would (for most methods) automatically ignore missing values. Note that in this example both variables are log-transformed to ensure normality and positivity.

##

## Call:

## lm(formula = logbr ~ logbw, data = dat)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.82879 -0.16408 0.05009 0.19548 0.35881

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 3.96599 0.09476 41.85 <2e-16 ***

## logbw 0.75322 0.02881 26.14 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.2616 on 58 degrees of freedom

## (3 observations deleted due to missingness)

## Multiple R-squared: 0.9218, Adjusted R-squared: 0.9204

## F-statistic: 683.4 on 1 and 58 DF, p-value: < 2.2e-16The parameter estimates of intercept = 3.9659 and slope = 0.7532 are very close to the values obtained using the full dataset of 3.9685 and 0.7516. In this case, ignoring the missing data worked just fine.

7.4.2 Option 2: Interpolate or impute missing data

If you need to replace the missing data, then the best option is to interpolate or impute them. How exactly to do this depends on the question being asked. If there is a clear relationship between the variable with missing values and another variable, then you can use that relationship to estimate the missing values. You can also use multiple imputation to estimate the missing values using information from several related variables at once. The example below illustrates simple interpolation (aka: single imputation) to replace the missing brain weights using known body masses.

# flag missing observations

flag <- which(is.na(dat$brw))

# log transform (if not done already)

dat$logbw <- log(dat$bow)

dat$logbr <- log(dat$brw)

# fit model

mod1 <- lm(logbr~logbw, data=dat)

# predict missing logbr at logbw

px <- data.frame(logbw=dat$logbw[flag])

pr <- predict(mod1, newdata=data.frame(px))

# insert predicted values

dat$logbr[flag] <- pr

# back-transform to original scale

dat$brw[flag] <- exp(dat$logbr[flag])The interpolated values are similar to the true values, but with quite a bit of error. The term for this is “correct to an order of magnitude”.

## [1] 729.7784 507.7160 2138.6809true.vals <- c(597, 543, 2070)

# percentage bias in interpolated values:

100 * (dat$brw[flag]-true.vals) / true.vals## [1] 22.240932 -6.497972 3.317919Interpolation can be improved by predicting the missing values using additional variables. Multiple imputation, like any statistical model, can suffer from the effects of multicollinearity in the “predictors”, so be mindful that you don’t obtain–and use–biased estimates of your missing values. Penone et al. (2014) reviewed some strategies for imputing missing data in the context of life history traits, but the approaches could be applied to other areas of biology.

Literature Cited

When calculating a distance matrix using geographic coordinates, be sure to account for the curvature of the Earth. Often using unprojected latitude and longitude will produce a misleading distance matrix, because latitude and longitude are not equivalent.↩︎